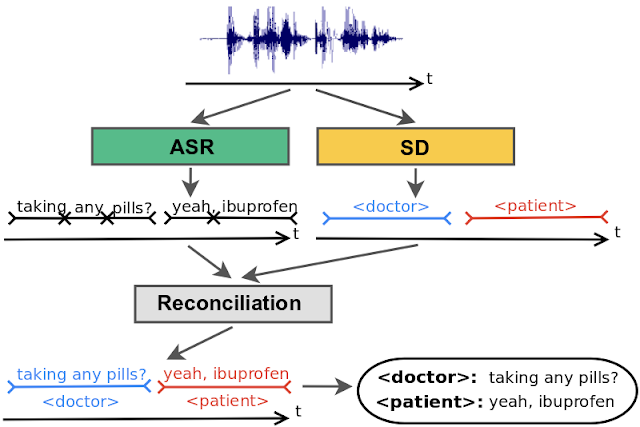

If you don't have domain-specific text but do have at least 10,000 words of audio transcript text, upload that text as training data. The text files that you use as training data or tuning data depend on whether you have domain-specific text data or audio transcript data. I'm not exactly sure what the input data should look like, as the AWS documentation did not provide examples, though some additional text I found provides some context. To create a Custom Language Model (CLM), you need to provide training or tuning data. I have not had time to prepare a Custom Language Model (CLM), but I suspect this might give me perfect accuracy. This is important if you are working in a specific domain such as medicine, law or even tech.

It is my assumption that the corrections made via Otter AI's editor are used to better train their model, which could explain why their results are a bit more accurate, at least compared with a general model. Both platforms support custom vocabulary, so if you have some tricky words this will increase accuracy. Amazon Transcribe's one significant advantage is the ability to have a Custom Model. Otter AI also allows you to edit the transcription before you export it; Amazon Transcribe has no such feature. Without doing any fancy tweaking, just dropping an audio clip into both Otter AI and Amazon Transcribe, Otter AI at a glance appears to have performed better than Amazon Transcribe.

So here it's great to see AWS doesn't cap your limit. One thing that is not clear is what happens when you hit 100 hours in Otter AI and you need more than 100 hours in a month.
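To make the Custom Language Model step more concrete, here is a minimal sketch of how a CLM request might be assembled with boto3's `create_language_model` call. The bucket paths, role ARN and model name are hypothetical placeholders, and the helper names `build_clm_request` and `create_clm` are my own for illustration. The training and tuning locations are S3 prefixes holding plain-text files; this sketch does not settle what those files should contain.

```python
from typing import Optional


def build_clm_request(
    model_name: str,
    training_s3_uri: str,
    role_arn: str,
    tuning_s3_uri: Optional[str] = None,
    language_code: str = "en-US",
    base_model: str = "WideBand",
) -> dict:
    """Build keyword arguments for Transcribe's CreateLanguageModel call.

    base_model is "WideBand" for audio sampled at 16 kHz or higher,
    "NarrowBand" for lower sample rates.
    """
    input_config = {
        "S3Uri": training_s3_uri,       # prefix holding the training text
        "DataAccessRoleArn": role_arn,  # role Transcribe assumes to read S3
    }
    if tuning_s3_uri:
        # Tuning data is optional, so only include the key when provided.
        input_config["TuningDataS3Uri"] = tuning_s3_uri
    return {
        "LanguageCode": language_code,
        "BaseModelName": base_model,
        "ModelName": model_name,
        "InputDataConfig": input_config,
    }


def create_clm(request: dict) -> dict:
    """Send the request to AWS. Needs boto3 and valid credentials."""
    import boto3  # imported lazily so the builder works without boto3

    client = boto3.client("transcribe")
    return client.create_language_model(**request)


# Hypothetical values, for illustration only.
request = build_clm_request(
    model_name="cloud-cert-clm",
    training_s3_uri="s3://example-bucket/clm/training/",
    role_arn="arn:aws:iam::123456789012:role/ExampleTranscribeAccess",
    tuning_s3_uri="s3://example-bucket/clm/tuning/",
)
```

Once the model has finished training, a transcription job can reference it through `ModelSettings={"LanguageModelName": "cloud-cert-clm"}` in `start_transcription_job`.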

AWS Transcribe pricing update

I might come back and update this article with any findings. I did not evaluate Google Speech and Azure Speech-to-Text because my primary workload runs on AWS.

Evaluation

For the rest of this article I am comparing Amazon Transcribe and Otter.ai, and here are the things I noticed after spending about 20 minutes with each platform. So I thought it was time to reevaluate integrating AI transcription back into my learning platform. I uploaded an example of a troublesome audio clip and I was blown away by the quality of the transcription. So having a transcription will be a very good supportive learning aid in this use case. Paired with the fact that the particular technical topic is hard to grasp, you'll be pausing and rewinding three times over.

I have new courses from other content creators joining my learning platform, and one specific course has audio quality issues. Someone mentioned they were using Otter.ai for speech-to-text, which had me thinking of a recent issue. The transcribers had a really hard time with cloud service names and terminology, and manually correcting the output was not worth it. I had tried Amazon Transcribe, Google Speech and Azure Speech-to-Text, and none of them were accurate enough, even with a custom vocabulary. Also, I wanted to highlight the spoken word alongside the video as it played. I had looked into transcribing my cloud certification courses about a year ago so that within my learning platform users could see a body of text, click any word and jump to that part of the video.
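The click-a-word-to-jump and highlight-as-it-plays ideas both rely on per-word timestamps. As a rough sketch, assuming the transcript is Amazon Transcribe's JSON output (where each entry in `results.items` carries `start_time`, `end_time` and a `type` of `pronunciation` or `punctuation`), the timings could be extracted like this. The `TimedWord`, `timed_words` and `word_at` names and the sample data are hypothetical.

```python
from typing import List, NamedTuple, Optional


class TimedWord(NamedTuple):
    text: str
    start: float  # seconds from the start of the media
    end: float


def timed_words(transcript: dict) -> List[TimedWord]:
    """Extract spoken words with their timings from a Transcribe result."""
    words = []
    for item in transcript["results"]["items"]:
        # Punctuation items carry no timestamps, so skip them.
        if item.get("type") != "pronunciation":
            continue
        words.append(
            TimedWord(
                text=item["alternatives"][0]["content"],
                start=float(item["start_time"]),
                end=float(item["end_time"]),
            )
        )
    return words


def word_at(words: List[TimedWord], seconds: float) -> Optional[TimedWord]:
    """Return the word being spoken at a playback position, if any."""
    for word in words:
        if word.start <= seconds < word.end:
            return word
    return None


# Tiny hand-written sample in the shape of Transcribe's output.
sample = {
    "results": {
        "items": [
            {"type": "pronunciation", "start_time": "0.0", "end_time": "0.45",
             "alternatives": [{"confidence": "0.99", "content": "Amazon"}]},
            {"type": "pronunciation", "start_time": "0.45", "end_time": "1.1",
             "alternatives": [{"confidence": "0.98", "content": "Transcribe"}]},
            {"type": "punctuation",
             "alternatives": [{"confidence": "0.0", "content": "."}]},
        ]
    }
}

words = timed_words(sample)
current = word_at(words, 0.5)  # the word to highlight at 0.5 seconds
```

Clicking a word in the rendered text would seek the video player to that word's `start`, while `word_at` covers the reverse direction of highlighting the word for the current playback time.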
